During this worldwide lockdown, the virtual world has overpowered the real one. The main drawback is that a person no longer needs to be physically present to mark their presence: a picture or even a signature is enough.

But the key question is this: is a virtual identity enough to authenticate a person? The answer is a big NO. Movies offer a common example. When an actor is not capable of a complex stunt, or for some reason cannot perform a particular scene, a technology known as Deepfake can make it appear to viewers that the real actor performed it.

As the name suggests, a deepfake has to do with spoofing or fraud. The word combines "deep learning" (for "deep") and "fake" (for the impersonation). With the help of deep learning, this technology can change the appearance, the behavior, or almost anything else that can be manipulated in a picture. For those who are not familiar with deep learning, the word "deep" refers to the many stacked layers of a neural network, each of which learns one part of the task. It is a branch of machine learning, which is itself part of artificial intelligence, and these layered networks translate raw input into a more compact form that the machine can work with.

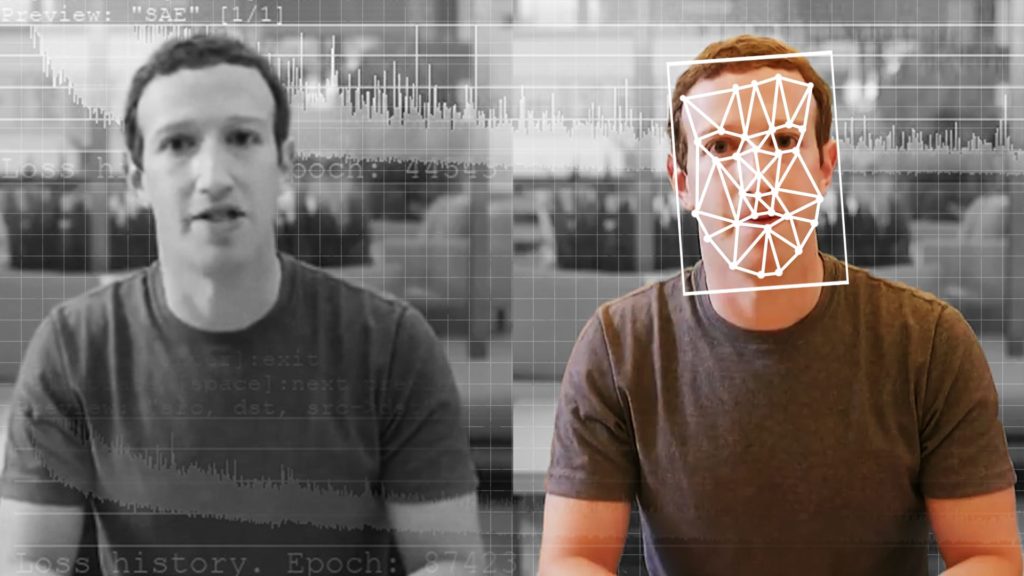

This picture illustrates the concept of deepfakes.

So far, you have read about the advantages of deepfakes. But big companies like Google and Facebook are struggling to curb this technology, because hackers and other bad actors use it for malicious purposes. One example of the harm it causes is its use in pornography. People who resent the fame of well-known actors use deepfakes to place them in fabricated explicit footage, producing a form of revenge porn that exposes the victim to ridicule around the world. Now some researchers from Boston University have found a technique that can help fight this. See how!

THE RESEARCH

Researchers from Boston University have developed an algorithm to fight the malicious use of deepfakes. With their technique, if someone tries to modify a protected picture using a deepfake model, the resulting image is either ruined or of no use to that person.

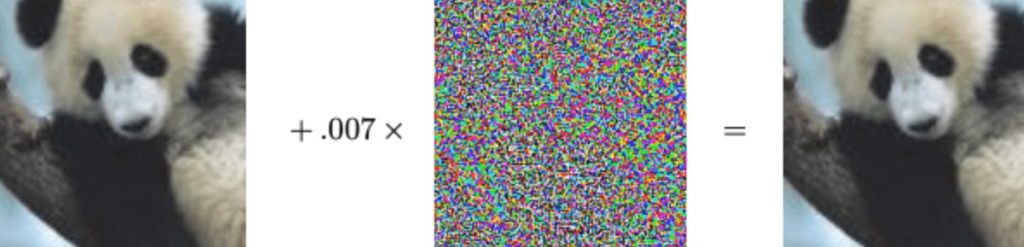

To preserve the integrity of a picture, they used a method known as the Fast Gradient Sign Method (FGSM). This method adds small disruptions to a given picture, chosen so that they do as much damage as possible to the output of the model that processes it. The disruptions made to the picture cannot be distinguished by the human eye. FGSM is conventionally used by attackers. The picture in this section illustrates the idea.
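In its standard form, FGSM fits in one line. The notation here is chosen for illustration and is not taken from the researchers' own write-up: $x$ is the input picture, $y$ the reference output, $f$ the model, $L$ a loss that measures how wrong the model's output is, and $\epsilon$ the size of the disruption.

$$
x_{\text{adv}} = x + \epsilon \cdot \operatorname{sign}\bigl(\nabla_x L(f(x), y)\bigr)
$$

Because every pixel moves by at most $\epsilon$, the change satisfies $\lVert x_{\text{adv}} - x \rVert_\infty \le \epsilon$, which is why a small $\epsilon$ keeps the disruption invisible.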

However, the researchers use this method for a good cause. They use the attack to put an imperceptible mask on the input picture. This mask is nothing but a set of small disruptions to the pixels. The level of disruption is measured by the magnitude of epsilon (ε), a parameter that bounds how much each pixel value may change. The resulting image is known as an adversarial image. When a deepfake model then tries to modify an image that has already been disturbed in this way, its output no longer holds together and comes out visibly distorted. These are the findings the researchers describe in the given video.
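The masking step described above can be sketched in a few lines of Python. This is a toy illustration, not the researchers' implementation: a tiny logistic model stands in for the deepfake network, the "image" is a list of four pixel values, and every name here (`predict`, `loss_gradient`, `fgsm`, the weights) is hypothetical.

```python
import math


def sign(value):
    """Return -1, 0 or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def predict(weights, bias, pixels):
    """Toy stand-in model: logistic score in (0, 1) for a flat list of pixels."""
    z = bias + sum(w * p for w, p in zip(weights, pixels))
    return 1.0 / (1.0 + math.exp(-z))


def loss_gradient(weights, bias, pixels, label):
    """Gradient of the cross-entropy loss with respect to each input pixel.

    For a logistic model this has the closed form (prediction - label) * weight.
    """
    error = predict(weights, bias, pixels) - label
    return [error * w for w in weights]


def fgsm(pixels, gradient, epsilon):
    """Move every pixel by epsilon in the direction that increases the loss."""
    shifted = [p + epsilon * sign(g) for p, g in zip(pixels, gradient)]
    # Keep the result a valid image with pixel values in [0, 1].
    return [min(1.0, max(0.0, v)) for v in shifted]


# Assumed toy values, chosen only for illustration.
weights = [2.0, -1.5, 0.5, 1.0]
bias = -0.5
image = [0.6, 0.2, 0.5, 0.4]
label = 1
epsilon = 0.05

gradient = loss_gradient(weights, bias, image, label)
adversarial = fgsm(image, gradient, epsilon)

print("score on original image:   ", predict(weights, bias, image))
print("score on adversarial image:", predict(weights, bias, adversarial))
```

No pixel moves by more than ε, yet the model's score for the correct label drops. In the deepfake setting the same idea would be applied to the image-manipulation network, with the gradient obtained from a deep learning framework rather than a closed-form expression.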

As you can see, they work with StarGAN, a model built on generative adversarial networks (GANs). A GAN is a machine learning neural network that is used to generate new data with the same statistics as the training set. In simple terms, it produces a similar character but with a different trait, and that is what a deepfake does.

CONCLUSION

The researchers who developed this algorithm also made it open source, so that every developer can take advantage of it. The link to the GitHub repository can be found by clicking here. In the end, since its development, deepfake technology has emerged more as a threat to users and organizations than as a useful invention. If the algorithm described above is implemented properly, many malicious actions can be prevented, at least to an extent.