The rise of scripting languages in the late 1990s was a revolution for developers, letting them serve dynamic content to their users. Over the last 15 years, scripting languages have changed how the World Wide Web (WWW) looks. The backend framework is still the most important part of a web project, but client-side scripting, or front-end development, now receives a great deal of attention as well. As a result, full-stack developers are in greater demand than developers who specialize in one side.


Scripts clearly help make pages dynamic, but they also provide metrics that help webmasters improve their SEO. Most webmasters deploy some JavaScript in a web project to track visitors. Based on the end user's browser and the functions embedded in the JavaScript, the webmaster obtains information that helps improve the user experience. This technique is also known as browser fingerprinting.

Under user privacy laws, it is legal to collect some data with the user's proper consent, but not data the user has not consented to. In stateful tracking, when you visit a legitimate site that asks about cookies, you can decline the tracking cookies you don't want. In stateless tracking, however, users have no control over the tracking. In this method, client-side scripts written in languages such as JavaScript collect the user's information; Google Analytics, for example, tracks user information through JavaScript. Similarly, web developers can deploy their own scripts that call functions such as the Battery API, the screen resolution API and graphics APIs to gather information about the user's device, which raises privacy issues.
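To make the stateless approach concrete, here is a minimal Python sketch of how a tracker could turn device attributes into an identifier without storing anything on the user's machine. The attribute names and values are hypothetical stand-ins for what a script might read from the browser, and the hashing scheme is an assumption for illustration, not the method of any particular tracker.

```python
import hashlib
import json


def fingerprint(attributes: dict) -> str:
    """Derive an identifier from device attributes alone (no cookie is stored)."""
    # Sort keys so the same attributes always serialize to the same string.
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


# Hypothetical values a script might collect from the browser.
visitor = {
    "user_agent": "Mozilla/5.0 (X11; Linux x86_64)",
    "screen": "1920x1080x24",
    "timezone_offset_min": -330,
    "gpu_renderer": "ExampleGPU 1000",
}

same_visitor_later = dict(visitor)                   # same device, later visit
other_visitor = dict(visitor, screen="1366x768x24")  # a different device

print(fingerprint(visitor) == fingerprint(same_visitor_later))  # True
print(fingerprint(visitor) == fingerprint(other_visitor))       # False
```

Because the identifier is recomputed from the device on every visit, clearing cookies does not reset it, which is why users have so little control over this kind of tracking.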

Web browsers such as Tor Browser and Firefox mitigate these privacy issues to an extent by blocking such scripts. But computers and servers are just dumb machines, and every instruction must be given before it is carried out. Just as a virus's signature changes when a string is added to it, a browser designed to block one particular script will not block a similar script that functions the same way but looks different.
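The weakness of exact matching can be shown with a short Python sketch. It assumes a hypothetical blocker that recognizes scripts by a hash of their source; real browsers typically rely on other mechanisms such as URL filter lists, but the evasion problem is analogous. The two JavaScript snippets are made up for the example.

```python
import hashlib


def signature(script_source: str) -> str:
    """Exact-content signature of a script (hypothetical blocking scheme)."""
    return hashlib.sha256(script_source.encode("utf-8")).hexdigest()


# A script the blocker already knows about.
known_tracker = "var r = screen.width + 'x' + screen.height; send(r);"
blocklist = {signature(known_tracker)}


def is_blocked(script_source: str) -> bool:
    return signature(script_source) in blocklist


# Same behaviour, but one variable has been renamed.
variant = "var res = screen.width + 'x' + screen.height; send(res);"

print(is_blocked(known_tracker))  # True
print(is_blocked(variant))        # False
```

A one-word change is enough to slip past the list, which is the motivation for detecting scripts by what they do rather than by how they look.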

A group of researchers from Mozilla, the University of Iowa and the University of California looked for these hidden identification scripts on the 100,000 most popular sites according to Alexa rankings and detected them on 9,040 sites (10.18%). When the sample is cut down to the top 1,000 sites, the share of websites caught with hidden identification scripts rises sharply to about 30 percent, i.e. 266 websites. Since 266 of 1,000 would be 26.6%, the percentages are presumably computed over the sites that could actually be analysed rather than over the full lists. Let's look at their findings and facts.

THE FINDINGS AND FACTS

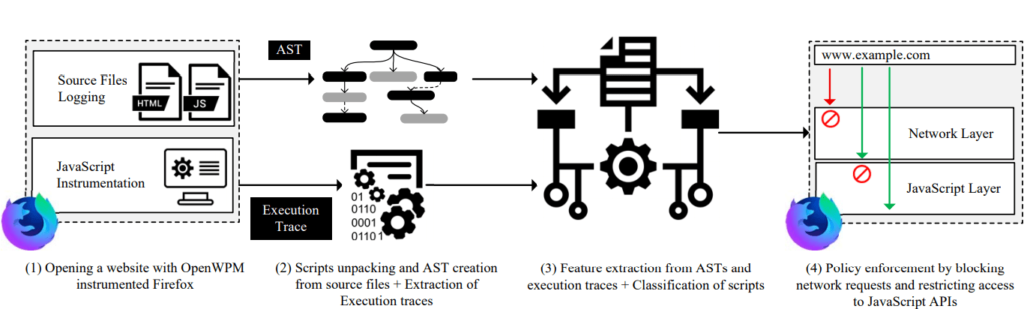

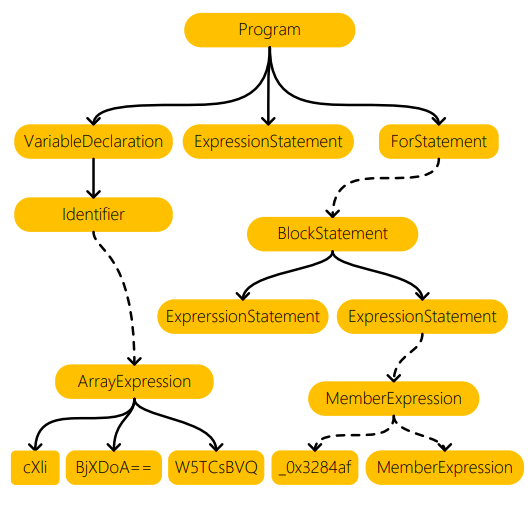

To identify the code that performs hidden identification, the researchers used the FP-Inspector toolkit, whose code is available under the MIT license. The toolkit combines machine learning techniques with static and dynamic analysis of JavaScript code. The websites containing such scripts were analysed using OpenWPM. The following diagram explains the whole process:

The researchers report that machine learning significantly improved the accuracy of detecting hidden identification code and revealed 26% more problematic scripts than had previously been detected with predefined parameters.

Umar Iqbal, one of the researchers on the team, presented this research and described some problems they ran into during static analysis:

- During static analysis, it was difficult to properly identify obfuscated or inline functions; these can only be detected through dynamic analysis.

- The number of API calls made by a script could only be determined through dynamic analysis.
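Both limitations can be illustrated with a small Python sketch. The first half counts literal mentions of API names in a script's source, a deliberately naive stand-in for static analysis; the second half uses a Python object as a stand-in for an instrumented browser API that logs calls at runtime. The watched API names are real browser methods, but the snippets, the class and the returned value are made up for the example, and none of this is FP-Inspector's actual code.

```python
import re
from collections import Counter

WATCHED_APIS = ["getBattery", "toDataURL"]


def static_api_counts(script_source: str) -> dict:
    """Count literal mentions of watched API names in the source text."""
    return {
        name: len(re.findall(r"\b" + re.escape(name) + r"\b", script_source))
        for name in WATCHED_APIS
    }


plain_js = "navigator.getBattery().then(report); var img = canvas.toDataURL();"
obfuscated_js = (
    "var k = 'get' + 'Bat' + 'tery'; navigator[k]().then(report);"
    " var img = canvas['to' + 'DataURL']();"
)

print(static_api_counts(plain_js))       # {'getBattery': 1, 'toDataURL': 1}
print(static_api_counts(obfuscated_js))  # {'getBattery': 0, 'toDataURL': 0}


class InstrumentedNavigator:
    """Python stand-in for a browser object whose methods log every call."""

    def __init__(self):
        self.calls = Counter()

    def getBattery(self):
        self.calls["getBattery"] += 1
        return {"level": 0.5}  # made-up value


nav = InstrumentedNavigator()
# Same trick as the obfuscated script: the method name is assembled at runtime.
method_name = "get" + "Bat" + "tery"
for _ in range(3):
    getattr(nav, method_name)()

print(nav.calls["getBattery"])  # 3
```

The text search sees nothing in the obfuscated script, while the runtime log records every call and how often it happened, regardless of how the name was built.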

After collecting results from both static and dynamic analysis, they used a decision tree algorithm to finally detect the scripts that collect hidden information.
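As a rough illustration of that last step, the Python sketch below shows how a decision tree turns combined static and dynamic features into a verdict. The feature names, thresholds and tree shape are invented for the example; in a real system they would be learned from labelled scripts, not written by hand.

```python
def classify(features: dict) -> str:
    """Walk a tiny hand-written decision tree (illustrative thresholds only)."""
    # Root split: did the script call many watched APIs at runtime?
    if features["dynamic_api_calls"] >= 5:
        # Second split: did it also read back canvas contents?
        if features["canvas_reads"] >= 1:
            return "fingerprinting"
        return "benign"
    # Few runtime calls: fall back to what static analysis found in the source.
    if features["static_api_mentions"] >= 3:
        return "fingerprinting"
    return "benign"


# Hypothetical feature vectors for three scripts.
tracker = {"dynamic_api_calls": 12, "canvas_reads": 2, "static_api_mentions": 0}
chart_lib = {"dynamic_api_calls": 6, "canvas_reads": 0, "static_api_mentions": 1}
dormant_tracker = {"dynamic_api_calls": 0, "canvas_reads": 0, "static_api_mentions": 4}

print(classify(tracker))          # fingerprinting
print(classify(chart_lib))        # benign
print(classify(dormant_tracker))  # fingerprinting
```

The third case shows why both kinds of features are useful: a script that did not run its tracking code during the crawl can still be flagged from its source.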

The researcher's presentation is given below:

CONCLUSION

It is a known fact that trackers and infostealers are everywhere on the World Wide Web. We believe webmasters do need some information to improve the user experience, but it should not include personal information about the user. Otherwise, there would be no difference between infostealers and trackers. Webmasters are therefore advised to collect only the information the user has consented to.
