The most promising technology that people around the world are working on is artificial intelligence. The term is somewhat misleading about what human intelligence means, and it leads us to think that AI is more capable than we are. With modern technology we are making computers capable of identifying real humans, but we should not forget that we humans are the ones developing the algorithms that make a dumb system act like a human. Over the last decade, there is hardly any industry left where automation has not been introduced, from tourism to IT, and it is predicted that in the coming decade automation will replace even the remaining manpower.

Automation and machine learning have helped us a lot, but we should not forget that if human intelligence can make mistakes, machines are vulnerable to mistakes too. The fact of the matter is that if we can make a machine learn for a good cause, bad actors can teach that same machine to do the reverse.

Recently, we covered research from Boston University in which the authors developed an algorithm that disrupts deepfakes. In that article, we described how they used an adversarial attack to preserve the integrity of an image so that it cannot be modified further. Further research using this technique, this time to protect users' privacy, has been conducted by the SAND Lab at the University of Chicago. Let's explore their research.

THE RESEARCH

Their research emphasizes that services like clearview.ai let a user search for a person using just a photograph. This means that if an attacker has a sufficiently clear image of a target, he can easily find all the social profiles that person is connected to. This is a major privacy concern, since anyone with no connection to you can use deep learning algorithms to plan a more sophisticated social engineering attack against you.

Their solution to this concern is to cloak the image. This means introducing small distortions, such as added noise or extra clusters of pixels, so that the image is no longer recognized correctly by deep learning algorithms.
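The idea of a small, targeted distortion can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the Fawkes implementation: the "feature extractor" is a made-up linear map with random weights standing in for a deep face recognition model, the "image" is a hypothetical list of 64 pixel values, and a simple per-pixel budget `eps` stands in for a real measure of what the human eye can notice. The sketch nudges the image so that its features drift toward those of a different image, while no pixel changes by more than `eps`.

```python
import random


def features(weights, pixels):
    """Toy linear 'feature extractor': one dot product per feature row."""
    return [sum(w * p for w, p in zip(row, pixels)) for row in weights]


def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def cloak(pixels, target_feat, weights, eps=0.03, steps=100, lr=0.002):
    """Projected gradient descent on ||W x - t||^2.

    Moves the features of the image toward target_feat while keeping every
    pixel within eps of its original value and inside the range [0, 1].
    """
    cloaked = list(pixels)
    for _ in range(steps):
        diff = [f - t for f, t in zip(features(weights, cloaked), target_feat)]
        # Gradient of ||W x - t||^2 with respect to x is 2 * W^T (W x - t).
        grad = [
            2 * sum(weights[k][i] * diff[k] for k in range(len(weights)))
            for i in range(len(pixels))
        ]
        for i, g in enumerate(grad):
            value = cloaked[i] - lr * g
            # Project back into the allowed budget around the original pixel.
            value = min(max(value, pixels[i] - eps), pixels[i] + eps)
            # Keep the result a valid pixel intensity.
            cloaked[i] = min(max(value, 0.0), 1.0)
    return cloaked


# Hypothetical data: random weights and random "images" (assumptions for the demo).
rng = random.Random(0)
n_pixels, n_feats = 64, 8
weights = [[rng.uniform(-1, 1) for _ in range(n_pixels)] for _ in range(n_feats)]
original = [rng.random() for _ in range(n_pixels)]
other_person = [rng.random() for _ in range(n_pixels)]
target_feat = features(weights, other_person)

cloaked = cloak(original, target_feat, weights)

max_change = max(abs(c - o) for c, o in zip(cloaked, original))
before = sq_dist(features(weights, original), target_feat)
after = sq_dist(features(weights, cloaked), target_feat)

print("largest pixel change:", max_change)
print("feature distance to target before:", before)
print("feature distance to target after:", after)
```

In this toy setup the feature distance to the target shrinks while the largest pixel change stays within `eps`. A real cloaking tool would replace the linear map with a deep feature extractor and the per-pixel budget with a perceptual similarity measure.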

To understand this concept, study the diagram below:

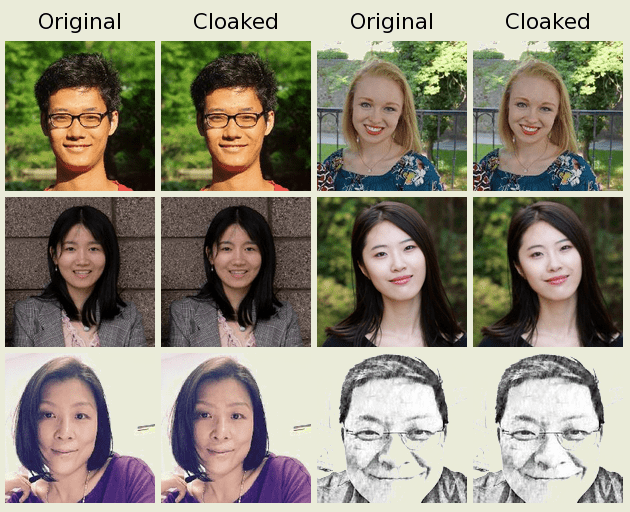

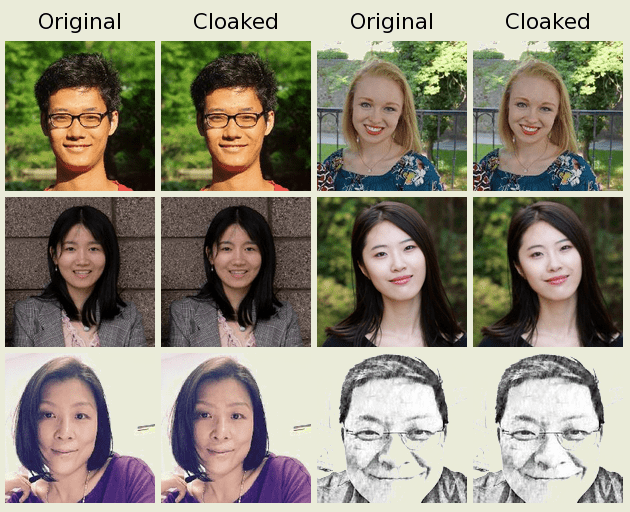

As you can see, the Fawkes tool takes the image supplied by the user and, with the help of a deep learning algorithm, adds a distortion that is imperceptible to the human eye. As a result, when an attacker tries to recognize you using a machine learning model built from these photographs, which disrupt the correct construction of that model, the attempt simply fails. The image below shows how the cloaked image and the original image look:

This method is reported to provide 95 percent protection against machine learning models, and services such as the Microsoft Azure recognition API, Amazon Rekognition and Face++ fail completely to recognize the cloaked image. It is even said that after many attempts with distorted photographs, an attacker who then tests the original image against the machine learning model will also fail. The researchers who devised this algorithm have also published a video explaining it.

CONCLUSION

The researchers have also published the binaries of this tool on their GitHub account. The tool is written in Python. Given how extensively artificial intelligence is used across applications today, anyone can gather information about you with very few resources. This tool proves to be an effective countermeasure against this concern.